Search-Based Relevance Association with Auxiliary Contextual Cues

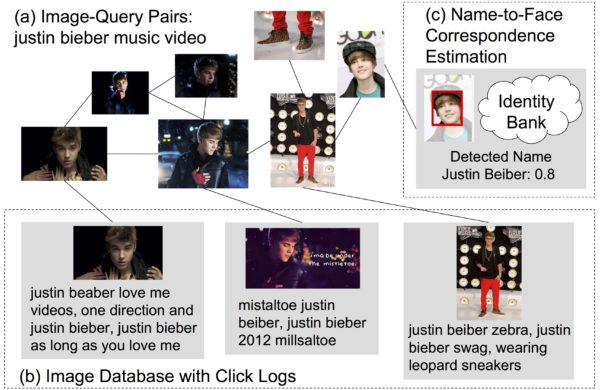

In this work, we aim to solve the MSR-Bing Image Retrieval Challenge provided by Microsoft. The task is to design an effective and efficient measure of how well query terms describe images (image-query pairs) crawled from the web. We observe that the provided image-query pairs (i.e., text-based image retrieval results) are usually related to their surrounding text; however, the relationships among the image contents themselves seem to be ignored. Hence, we integrate visual information to obtain better ranking results. In addition, we found that many query terms are related to people (e.g., celebrities) and that users may issue similar queries to the search engine, as reflected in click logs. Therefore, we propose a relevance association approach that investigates the effectiveness of different auxiliary contextual cues (i.e., faces, click logs, and visual similarity). Experimental results show that the proposed method achieves a 16% relative improvement over the original ranking results. For people-related queries in particular, the relative improvement reaches 45.7%.
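The abstract does not say how the auxiliary cues are combined with the original ranking. As a purely illustrative sketch, the code below assumes a weighted linear late fusion: each image-query pair carries its original text-based score plus hypothetical face, click-log, and visual-similarity scores (all assumed normalized to [0, 1]), and pairs are re-ranked by the fused score. The class `PairCues`, the functions `fused_relevance`, `rerank`, and `relative_improvement`, and the weights are all hypothetical and are not the authors' method. The last helper shows how a "relative improvement" figure such as 16% is computed from a baseline and a new metric value.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

@dataclass(frozen=True)
class PairCues:
    """Hypothetical per-(image, query) scores, each assumed normalized to [0, 1]."""
    text_score: float    # original text-based ranking score
    face_score: float    # e.g., face-match confidence for people-related queries
    click_score: float   # e.g., evidence from click logs of similar queries
    visual_score: float  # e.g., visual similarity to other images for the query

# Hypothetical fusion weights (sum to 1); in practice they would be tuned on held-out data.
DEFAULT_WEIGHTS: Dict[str, float] = {"text": 0.4, "face": 0.2, "click": 0.2, "visual": 0.2}

def fused_relevance(cues: PairCues, weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted linear late fusion of the original score and the auxiliary cues."""
    w = DEFAULT_WEIGHTS if weights is None else weights
    return (w["text"] * cues.text_score
            + w["face"] * cues.face_score
            + w["click"] * cues.click_score
            + w["visual"] * cues.visual_score)

def rerank(pairs: List[Tuple[str, PairCues]]) -> List[Tuple[str, PairCues]]:
    """Sort (image_id, cues) pairs by fused relevance, highest first."""
    return sorted(pairs, key=lambda p: fused_relevance(p[1]), reverse=True)

def relative_improvement(baseline: float, new: float) -> float:
    """Relative improvement of `new` over `baseline`; 0.16 corresponds to +16%."""
    return (new - baseline) / baseline

if __name__ == "__main__":
    pairs = [
        ("img_a", PairCues(text_score=0.9, face_score=0.0, click_score=0.1, visual_score=0.2)),
        ("img_b", PairCues(text_score=0.6, face_score=0.9, click_score=0.8, visual_score=0.7)),
    ]
    for image_id, cues in rerank(pairs):
        print(image_id, round(fused_relevance(cues), 2))
    print(round(relative_improvement(0.5, 0.58), 2))
```

In this toy example, `img_b` has a lower text score than `img_a` but strong auxiliary evidence, so the fused score (0.72 vs. 0.42) moves it to the top. A linear combination is chosen here only because it is the simplest fusion to read; learned or query-dependent weighting (e.g., emphasizing the face cue only for people-related queries) would be natural alternatives.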

This work won the ACM Multimedia 2013 Grand Challenge Multimodal Award and first prize in the MSR-Bing Image Retrieval Challenge.