Free-form Video Inpainting with 3D Gated Convolution and Temporal PatchGAN

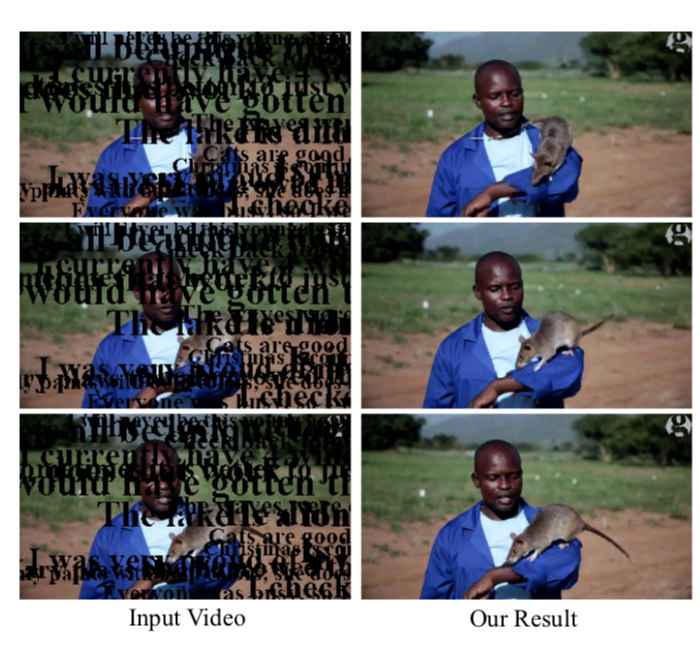

Free-form video inpainting is a challenging task with broad applications in video editing, such as text removal (see Fig. 1). Existing patch-based methods cannot handle non-repetitive structures such as faces, while directly applying image-based inpainting models to videos results in temporal inconsistency (see videos). In this paper, we introduce a deep-learning-based free-form video inpainting model that uses the proposed 3D gated convolutions to tackle the uncertainty of free-form masks and a novel Temporal PatchGAN loss to enhance temporal consistency. In addition, we collect videos and design a free-form mask generation algorithm to build the free-form video inpainting (FVI) dataset for training and evaluating video inpainting models. We demonstrate the benefits of these components, and experiments on both the FaceForensics dataset and our FVI dataset suggest that our method is superior to existing ones. Related source code, full-resolution result videos, and the FVI dataset can be found on GitHub (https://github.com/amjltc295/Free-Form-Video-Inpainting).
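To make the idea of a 3D gated convolution concrete, the following is a minimal sketch, not the released implementation. It assumes the common gated-convolution form, in which one convolution produces features and a second produces a soft gate in (0, 1) that scales them, so the layer can learn to down-weight responses from masked regions. The single-channel input, the LeakyReLU activation, and the names `conv3d_valid` and `gated_conv3d` are illustrative assumptions; a real model would use multi-channel learned kernels in a deep learning framework.

```python
import math


def conv3d_valid(volume, kernel, bias=0.0):
    """Single-channel 3D cross-correlation over (time, height, width).

    No padding, stride 1. Inputs are nested lists of floats.
    """
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    t_len, h_len, w_len = len(volume), len(volume[0]), len(volume[0][0])
    out = []
    for t in range(t_len - kt + 1):
        plane = []
        for y in range(h_len - kh + 1):
            row = []
            for x in range(w_len - kw + 1):
                acc = bias
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += volume[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def leaky_relu(v, slope=0.2):
    # Activation choice is an assumption made for this sketch.
    return v if v > 0 else slope * v


def gated_conv3d(volume, feature_kernel, gate_kernel):
    """Assumed gating form: output = leaky_relu(features) * sigmoid(gates)."""
    features = conv3d_valid(volume, feature_kernel)
    gates = conv3d_valid(volume, gate_kernel)
    return [
        [
            [leaky_relu(f) * sigmoid(g) for f, g in zip(f_row, g_row)]
            for f_row, g_row in zip(f_plane, g_plane)
        ]
        for f_plane, g_plane in zip(features, gates)
    ]
```

Because the kernels span the time axis as well as the spatial axes, each output value depends on neighboring frames, which is what distinguishes this sketch from a per-frame 2D gated convolution.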