Unsupervised Auxiliary Visual Words Discovery for Large-Scale Image Object Retrieval

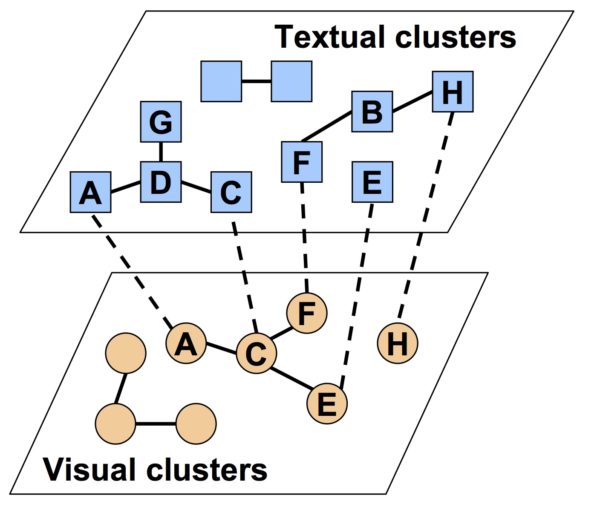

Image object retrieval, that is, locating image occurrences of specific objects in large-scale image collections, is essential for managing the sheer volume of photos. Current solutions, mostly based on the bag-of-words model, suffer from low recall and are not robust to noise caused by changes in lighting and viewpoint, or by occlusion. We propose to augment each image with auxiliary visual words (AVWs) that are semantically relevant to the search targets. The AVWs are discovered automatically, in an unsupervised manner, by feature propagation and selection in textual and visual image graphs. We investigate various optimization methods for effectiveness and scalability in large-scale image collections. In experiments on large-scale consumer photos, we found that the proposed method significantly improves on the traditional bag-of-words model (a 111% relative improvement). Meanwhile, the selection process notably reduces the number of features (to 1.4%) and can further facilitate indexing in large-scale image object retrieval.
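To make the idea of propagation followed by selection concrete, the following is a minimal sketch and not the optimization used in this work. It assumes a single image graph given as a nonnegative similarity matrix `W`, bag-of-words histograms `X`, a damped iterative update with a hypothetical mixing weight `alpha`, and a simple top-`k` selection rule. The function names `propagate_visual_words` and `select_top_words` are illustrative only.

```python
import numpy as np

def propagate_visual_words(X, W, alpha=0.5, n_iter=10):
    """Spread visual-word weights along graph edges (illustrative assumption).

    X: (n_images, n_words) bag-of-words histograms.
    W: (n_images, n_images) nonnegative image-to-image similarities.
    alpha: hypothetical weight on the neighbors' features.
    """
    X = np.asarray(X, dtype=float)
    W = np.asarray(W, dtype=float)
    row_sums = W.sum(axis=1, keepdims=True)
    # Row-normalize the graph; images with no neighbors receive nothing.
    P = np.divide(W, row_sums, out=np.zeros_like(W), where=row_sums > 0)
    F = X.copy()
    for _ in range(n_iter):
        # Mix features propagated from neighbors with the image's own words.
        F = alpha * (P @ F) + (1.0 - alpha) * X
    return F

def select_top_words(F, k):
    """Keep the k largest-weight words per image and zero out the rest."""
    F = np.asarray(F, dtype=float)
    k = min(k, F.shape[1])
    keep = np.argsort(-F, axis=1)[:, :k]
    S = np.zeros_like(F)
    rows = np.arange(F.shape[0])[:, None]
    S[rows, keep] = F[rows, keep]
    return S

# Toy example: images 0 and 1 are linked, image 2 is isolated.
X = np.eye(3)
W = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 0.0]])
F = propagate_visual_words(X, W)
S = select_top_words(F, k=1)
```

In this toy example, image 0 acquires a nonzero weight for the word that originally appeared only in its neighbor, image 1, which is the sense in which propagated words act as auxiliary features. The selection step then trims each image back to a small number of words, mirroring the feature reduction described above.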