Saliency Aware: Weakly Supervised Object Localization

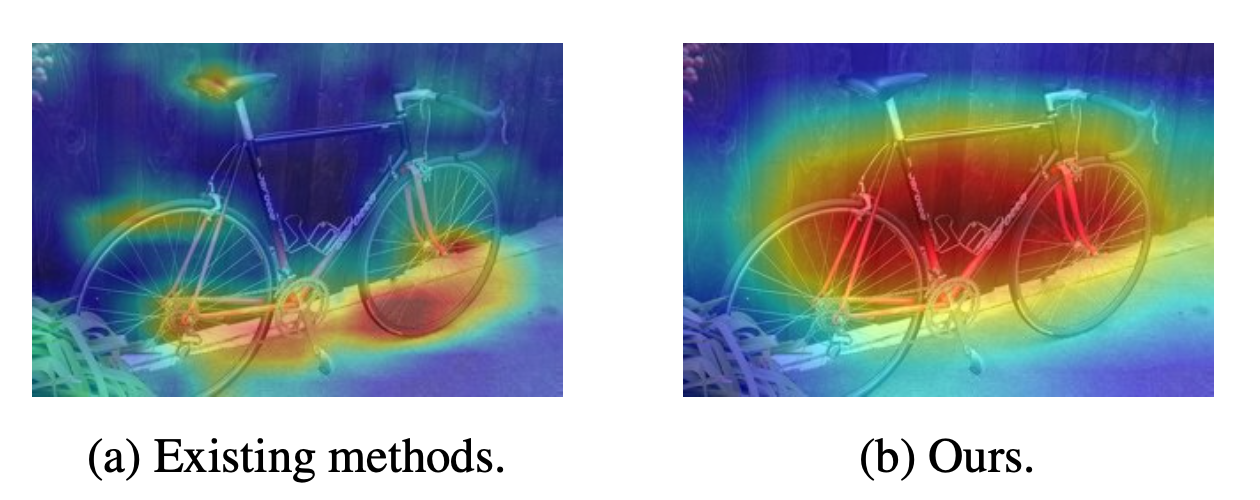

Object localization aims at localizing the object in a given image. Due to the recent success of convolutional neural networks (CNNs), existing methods have shown promising results in weakly-supervised learning fashion. By training a classifier, these methods learn to localize the objects by visualizing the class discriminative localization maps based on the classification prediction. However, correct classification results would not guarantee sufficient localization performance since the model may only focus on the most discriminative parts rather than the entire object. To address the aforementioned issue, we propose a novel and end-to-end trainable network for weakly-supervised object localization. The key insights to our algorithm are two-fold. First, to encourage our model to focus on detecting foreground objects, we develop a salient object detection module. Second, we propose a perceptual triplet loss that further enhances the foreground object detection capability. As such, our model learns to predict objectness, resulting in more accurate localization results. We conduct experiments on the challenging ILSVRC dataset. Extensive experimental results demonstrate that the proposed approach performs favorably against the state-of-the-arts.