Reranking Methods for Visual Search

IEEE Multimedia Magazine, Volume 14, Issue 3, Pages 14-22, July-September 2007

Publication year: 2007

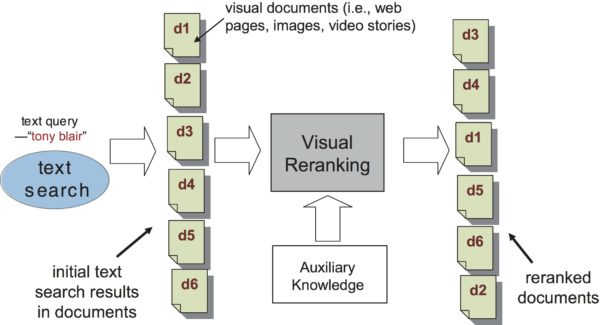

Most semantic video search methods rely on text-keyword queries or on example video clips and images, and both kinds of query have limitations. To address the problems of example-based video search approaches and avoid the use of specialized models, we conduct semantic video searches using a reranking method that automatically reorders the initial text search results based on visual cues and associated context. We developed two general reranking methods that exploit the recurrent visual patterns found in many contexts, such as the images or video shots returned by initial text queries, and video stories from multiple channels.
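To make the idea of reordering text search results by recurrent visual patterns concrete, here is a minimal sketch. It is not the article's method: the function names (`cosine`, `rerank`), the blending weight `alpha`, and the use of cosine similarity over toy feature vectors are all assumptions chosen for illustration. Each result's initial text score is blended with a "recurrence" score, which measures how visually similar that result is to the other returned results.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def rerank(text_scores, features, alpha=0.5):
    """Return result indices reordered by a blend of text and visual evidence.

    Hypothetical scheme (an assumption, not the published algorithm):
    recurrence(i) is the text-score-weighted average visual similarity
    between result i and every other result, and
    final(i) = alpha * text_score(i) + (1 - alpha) * recurrence(i).
    """
    n = len(text_scores)
    final = []
    for i in range(n):
        weight_sum = sum(text_scores[j] for j in range(n) if j != i)
        sim_sum = sum(
            text_scores[j] * cosine(features[i], features[j])
            for j in range(n) if j != i
        )
        recurrence = sim_sum / weight_sum if weight_sum else 0.0
        final.append(alpha * text_scores[i] + (1 - alpha) * recurrence)
    # Highest blended score first; ties keep the initial text ranking order.
    return sorted(range(n), key=lambda i: -final[i])

# Toy data: result 0 has the best text score but is a visual outlier,
# while results 1-3 share a recurring visual pattern.
text_scores = [0.9, 0.5, 0.45, 0.4]
features = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
order = rerank(text_scores, features)  # [1, 2, 3, 0]
```

With `alpha=1.0` the visual term is ignored and the initial text ranking is returned unchanged, so `alpha` controls how strongly the visual recurrence is allowed to override the text search.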