Preference-Aware View Recommendation System for Scenic Photos Based on Bag-of-Aesthetics-Preserving Features

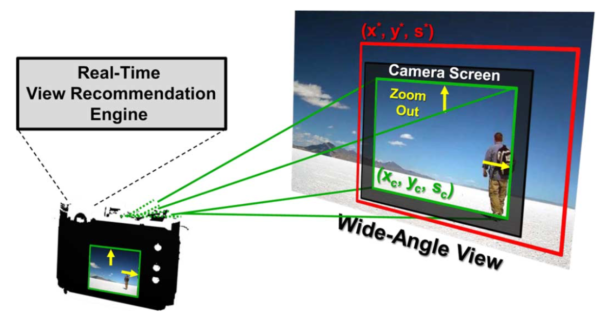

This paper proposes a framework for a real-time view recommendation system. The system comprises two parts: an offline aesthetic modeling stage and an efficient online aesthetic view-finding process. In the first part, a preference-aware aesthetic model is proposed to suggest views according to varied user-favorite photographic styles. A bottom-up approach is developed to construct an aesthetic feature library with bag-of-aesthetics-preserving features, in contrast to the top-down methods of previous work, which implement the heuristic guidelines (rule-specific features) listed in the photography literature. A collection of scenic photos is used as the test set; however, the proposed method can be applied to other types of photo collections according to different application scenarios. The proposed model covers both implicit and explicit aesthetic features and can adapt to users’ preferences through a learning process. In the second part, the learned model is employed in a view finder to help the user locate the most aesthetic view while taking a photograph. The experimental results show that the proposed features in the library (92.06% accuracy) significantly outperform the state-of-the-art rule-specific features (83.63% accuracy) in the photo aesthetic quality classification task, and the rule-specific features are also shown to be encompassed by the proposed features. The experiments also indicate that features extracted for contrast information are more effective than those for absolute information, which is consistent with the properties of the human visual system. Furthermore, user studies for the view recommendation task confirm that the suggested views are consistent with users’ preferences (81.25% agreement).
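The online view-finding step described above can be illustrated with a minimal sketch, which is not the implementation used in this work. It assumes that candidate views are fixed-size crops enumerated by a sliding window and that a learned aesthetic model is available as a scoring function. The names `candidate_windows`, `crop`, `recommend_view`, and `toy_contrast_score` are hypothetical, and the toy scorer (standard deviation of intensities) is only a stand-in for the learned preference-aware model.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

Image = Sequence[Sequence[float]]      # grayscale image as rows of intensities
Window = Tuple[int, int, int, int]     # (top, left, height, width)


def candidate_windows(img_h: int, img_w: int,
                      win_h: int, win_w: int, stride: int) -> Iterator[Window]:
    """Enumerate every crop window of the given size that fits in the image."""
    for top in range(0, img_h - win_h + 1, stride):
        for left in range(0, img_w - win_w + 1, stride):
            yield (top, left, win_h, win_w)


def crop(image: Image, window: Window) -> List[List[float]]:
    """Extract the pixels covered by a window."""
    top, left, h, w = window
    return [list(row[left:left + w]) for row in image[top:top + h]]


def recommend_view(image: Image,
                   score: Callable[[List[List[float]]], float],
                   win_h: int, win_w: int, stride: int = 1) -> Window:
    """Return the candidate window with the highest score.

    `score` stands in for the learned aesthetic model (an assumption here).
    """
    img_h, img_w = len(image), len(image[0])
    windows = list(candidate_windows(img_h, img_w, win_h, win_w, stride))
    if not windows:
        raise ValueError("window is larger than the image")
    return max(windows, key=lambda w: score(crop(image, w)))


def toy_contrast_score(patch: List[List[float]]) -> float:
    """Placeholder scorer: standard deviation of intensities in the patch."""
    values = [v for row in patch for v in row]
    mean = sum(values) / len(values)
    return (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5


if __name__ == "__main__":
    frame = [
        [0, 0, 0, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 9],
        [0, 0, 9, 0],
    ]
    print(recommend_view(frame, toy_contrast_score, win_h=2, win_w=2))
```

In a real-time setting, the exhaustive search would likely be restricted to a coarse stride or a small set of candidate views. That choice is left open here because the text above does not specify the search strategy.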