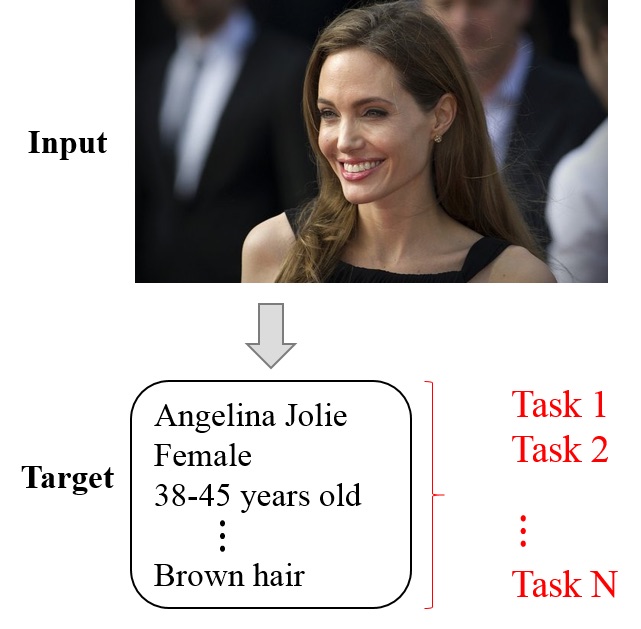

Multi-task learning for face identification and attribute estimation

Convolutional neural networks (CNNs) have been shown to be among the state-of-the-art approaches for learning face representations. However, previous work has used only identity information, without leveraging human attributes (e.g., gender and age) that carry high-level semantic meaning. In this work, we incorporate both identity and human attributes into the learning of discriminative face representations through multi-task learning. In our experiments, we learn face representations on CASIA-WebFace, the largest publicly available face dataset, annotated with gender and age labels. We then evaluate the learned features on the widely used LFW benchmark for face verification and identification. We also compare how effectively different attributes improve face identification. The results show that the proposed model outperforms both a baseline CNN trained without multi-task learning and hand-crafted features such as high-dimensional LBP. Finally, we conduct gender and age estimation experiments on the Adience benchmark to demonstrate that human attribute prediction also benefits from the proposed multi-task representation learning.
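The multi-task idea above can be illustrated as a weighted sum of per-task classification losses computed on one shared face representation. The following is a minimal sketch under assumptions that the abstract does not state. Each task (identity, gender, age group) gets its own linear softmax head, and age is treated as a discrete set of groups. The per-task loss weights are hypothetical hyperparameters. The function names, head sizes, and `task_weights` values are all illustrative, not the authors' architecture. In a real model, `batch_features` would come from the shared CNN layers. Gradients of `total` would then flow back through every head into those shared layers.

```python
import math
import random

def softmax_cross_entropy(logits, label):
    """Cross-entropy of one integer label under softmax(logits): logsumexp(z) - z[label]."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

def linear(features, W, b):
    """Per-class logits: dot(features, W[c]) + b[c]; W holds one weight vector per class."""
    return [sum(f * w for f, w in zip(features, row)) + bias for row, bias in zip(W, b)]

def multitask_loss(batch_features, heads, batch_labels, task_weights):
    """Hypothetical multi-task objective: weighted sum of mean per-task cross-entropies,
    where every task head reads the same shared feature vectors."""
    per_task = {}
    for task, (W, b) in heads.items():
        losses = [
            softmax_cross_entropy(linear(x, W, b), y)
            for x, y in zip(batch_features, batch_labels[task])
        ]
        per_task[task] = sum(losses) / len(losses)
    total = sum(task_weights[t] * per_task[t] for t in per_task)
    return total, per_task

# Illustrative usage with random "shared features" standing in for CNN outputs.
random.seed(0)
DIM = 8
SIZES = {"identity": 10, "gender": 2, "age": 8}  # illustrative class counts

def random_head(num_classes):
    W = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(num_classes)]
    return W, [0.0] * num_classes

heads = {task: random_head(n) for task, n in SIZES.items()}
batch_features = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(4)]
batch_labels = {task: [random.randrange(n) for _ in range(4)] for task, n in SIZES.items()}
task_weights = {"identity": 1.0, "gender": 0.5, "age": 0.5}  # hypothetical weights

total, per_task = multitask_loss(batch_features, heads, batch_labels, task_weights)
print(f"total={total:.3f}", {t: round(v, 3) for t, v in per_task.items()})
```

Setting the gender and age weights to zero recovers an identity-only objective, which corresponds to the single-task baseline the abstract compares against.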