Keyword-Based Concept Search on Consumer Photos by Web-based Kernel Function

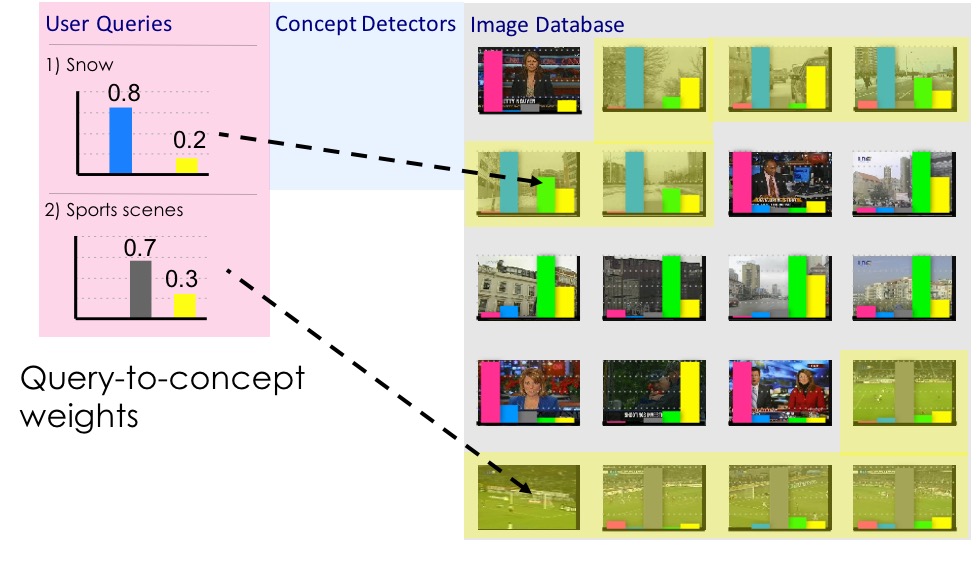

In light of the strong demand for semantic search over large-scale consumer photos, which generally lack reliable user-provided annotations, we investigate the feasibility and challenges of a new paradigm, concept search: retrieving visual objects with keywords by means of large-scale automatic concept detectors. We study the problem along three dimensions: (1) effective methods for mapping keywords to concepts and selecting concepts over a large-scale concept ontology; (2) the quality and feasibility of applying pre-trained concept detectors to cross-domain consumer data (i.e., Flickr photos); and (3) the search quality obtained by fusing automatic concepts with user-annotated data (tags). Through experiments on two large-scale benchmarks, TRECVID and Flickr550, we confirm the effectiveness of concept search within the proposed framework, in which semantic mapping by a web-based kernel function over Google snippets significantly outperforms conventional WordNet-like methods in both accuracy and efficiency.
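To make the keyword-to-concept mapping step concrete, the following is a minimal sketch of a web-based kernel function, assuming the well-known formulation of Sahami and Heilman: each text is represented by the search-engine snippets it retrieves, each snippet becomes a unit-length TF-IDF vector, the vectors are averaged into a centroid and re-normalized, and the kernel is the inner product of two such centroids. This is not presented as the authors' exact implementation. No search API is called; the snippets, the concept names (`Boat_Ship`, `Animal`), the helper names, the toy IDF estimated from the snippet pool, and the `top_k` parameter are all hypothetical stand-ins chosen for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    # Lowercase and split on any non-alphanumeric character (deliberately simple).
    return "".join(c.lower() if c.isalnum() else " " for c in text).split()


def l2_normalize(vec):
    # Scale a sparse vector {term: weight} to unit Euclidean length.
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {t: w / norm for t, w in vec.items()} if norm > 0 else {}


def compute_idf(docs):
    # IDF estimated from whatever snippet pool is available (an assumption here).
    df = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))
    return {t: math.log(len(docs) / d) for t, d in df.items()}


def expand(snippets, idf):
    # Unit-length TF-IDF vector per snippet, averaged into a centroid,
    # then re-normalized to unit length.
    centroid = Counter()
    for s in snippets:
        tf = Counter(tokenize(s))
        vec = l2_normalize({t: c * idf.get(t, 0.0) for t, c in tf.items()})
        for t, w in vec.items():
            centroid[t] += w / len(snippets)
    return l2_normalize(dict(centroid))


def web_kernel(snippets_x, snippets_y, idf):
    # Kernel value = inner product of the two expanded (unit) vectors.
    qx, qy = expand(snippets_x, idf), expand(snippets_y, idf)
    return sum(w * qy.get(t, 0.0) for t, w in qx.items())


def rank_concepts(query_snippets, concept_snippets, idf, top_k=3):
    # Keyword-to-concept mapping: score every concept against the query, keep the best.
    scores = {c: web_kernel(query_snippets, s, idf) for c, s in concept_snippets.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]


# Hypothetical toy data standing in for search-engine snippets.
QUERY_SNIPPETS = ["a ship sails across the sea", "cargo ship in the harbor"]
CONCEPT_SNIPPETS = {
    "Boat_Ship": ["a boat sails on the sea", "fishing boat docked in the harbor"],
    "Animal": ["a dog runs in the park", "the cat sleeps on the sofa"],
}
IDF = compute_idf(QUERY_SNIPPETS + [s for v in CONCEPT_SNIPPETS.values() for s in v])

if __name__ == "__main__":
    for concept, score in rank_concepts(QUERY_SNIPPETS, CONCEPT_SNIPPETS, IDF):
        print(f"{concept}: {score:.3f}")
```

Because both expanded vectors have unit length and all weights are non-negative, the kernel lies in [0, 1], and a text compared with itself scores 1. Dividing by `len(snippets)` has no effect after re-normalization; it is kept only to make the centroid explicit.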