GPS, Compass, or Camera?: Investigating Effective Mobile Sensors for Automatic Search-Based Image Annotation

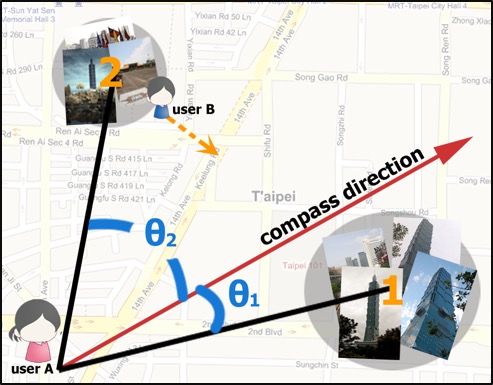

Smartphones are being equipped with a growing variety of sensors, each of which captures a different aspect of the context in which a photo is taken. When a user takes a photo, the image content, the location, and even the direction the user is facing can all help us understand the photo itself. Each of these factors can be treated as an input to an image search system. However, most existing algorithms for image retrieval (or annotation) focus only on the content and location of the images; they ignore the direction the camera is facing and offer little insight into what each sensor can contribute. In this paper, we propose a novel ranking algorithm that combines readings from different sensors with a traditional content-based image retrieval system, and we further apply it to annotate images. We evaluate different combinations of sensors and investigate how geolocation, image content, and compass direction influence image retrieval.
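To make the idea of combining the three signals concrete, the following is a minimal illustrative sketch, not the ranking algorithm proposed in this paper. It assumes a content similarity score in [0, 1] has already been computed by some content-based retrieval system, and it fuses that score with GPS proximity and compass agreement through a simple weighted sum. The `Photo` type, the function names, the weights, and the 0.5 km distance scale are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Photo:
    # Hypothetical record for a database photo; all fields are assumptions.
    photo_id: str
    lat: float          # GPS latitude in degrees
    lon: float          # GPS longitude in degrees
    heading_deg: float  # compass heading in degrees, 0 = north
    content_sim: float  # precomputed content similarity to the query, in [0, 1]


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def heading_diff_deg(a, b):
    """Smallest angle between two compass headings, in [0, 180]."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def fused_score(q_lat, q_lon, q_heading, cand,
                w_content=0.5, w_geo=0.3, w_dir=0.2, geo_scale_km=0.5):
    """Weighted sum of content, location, and direction agreement (assumed form)."""
    # Location agreement decays exponentially with distance from the query.
    geo = math.exp(-haversine_km(q_lat, q_lon, cand.lat, cand.lon) / geo_scale_km)
    # Direction agreement is 1 for identical headings and 0 for opposite ones.
    direction = 1.0 - heading_diff_deg(q_heading, cand.heading_deg) / 180.0
    return w_content * cand.content_sim + w_geo * geo + w_dir * direction


def rank_candidates(q_lat, q_lon, q_heading, candidates):
    """Return candidates sorted from best to worst fused score."""
    return sorted(candidates,
                  key=lambda c: fused_score(q_lat, q_lon, q_heading, c),
                  reverse=True)
```

Setting one of the weights to zero corresponds to dropping that sensor, which is one simple way to compare sensor combinations under this assumed scoring form.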