Face Recognition and Retrieval Using Cross-Age Reference Coding With Cross-Age Celebrity Dataset

Abstract:

This paper introduces a method for face recognition across age, together with a dataset that captures age variation in the wild. We address the cross-age face recognition problem with a data-driven method called cross-age reference coding (CARC). By leveraging a large-scale image dataset freely available on the Internet as a reference set, CARC can encode the low-level feature of a face image in an age-invariant reference space. In the retrieval phase, our method requires only a linear projection to encode the feature, and thus it is highly scalable. To evaluate our method, we introduce a large-scale dataset called the cross-age celebrity dataset (CACD). The dataset contains more than 160,000 images of 2,000 celebrities with ages ranging from 16 to 62. Experimental results show that our method achieves state-of-the-art performance on both CACD and another widely used dataset for face recognition across age. To understand the difficulties of face recognition across age, we further construct a verification subset of CACD, called CACD-VS, and conduct a human evaluation using Amazon Mechanical Turk. CACD-VS contains 2,000 positive pairs and 2,000 negative pairs and is carefully annotated by checking both the associated image and web contents. Our experiments show that although state-of-the-art methods achieve performance competitive with that of an average human, a majority vote among several humans achieves much higher performance on this task. The gap between machine and human performance suggests possible directions for further improvement of cross-age face recognition.

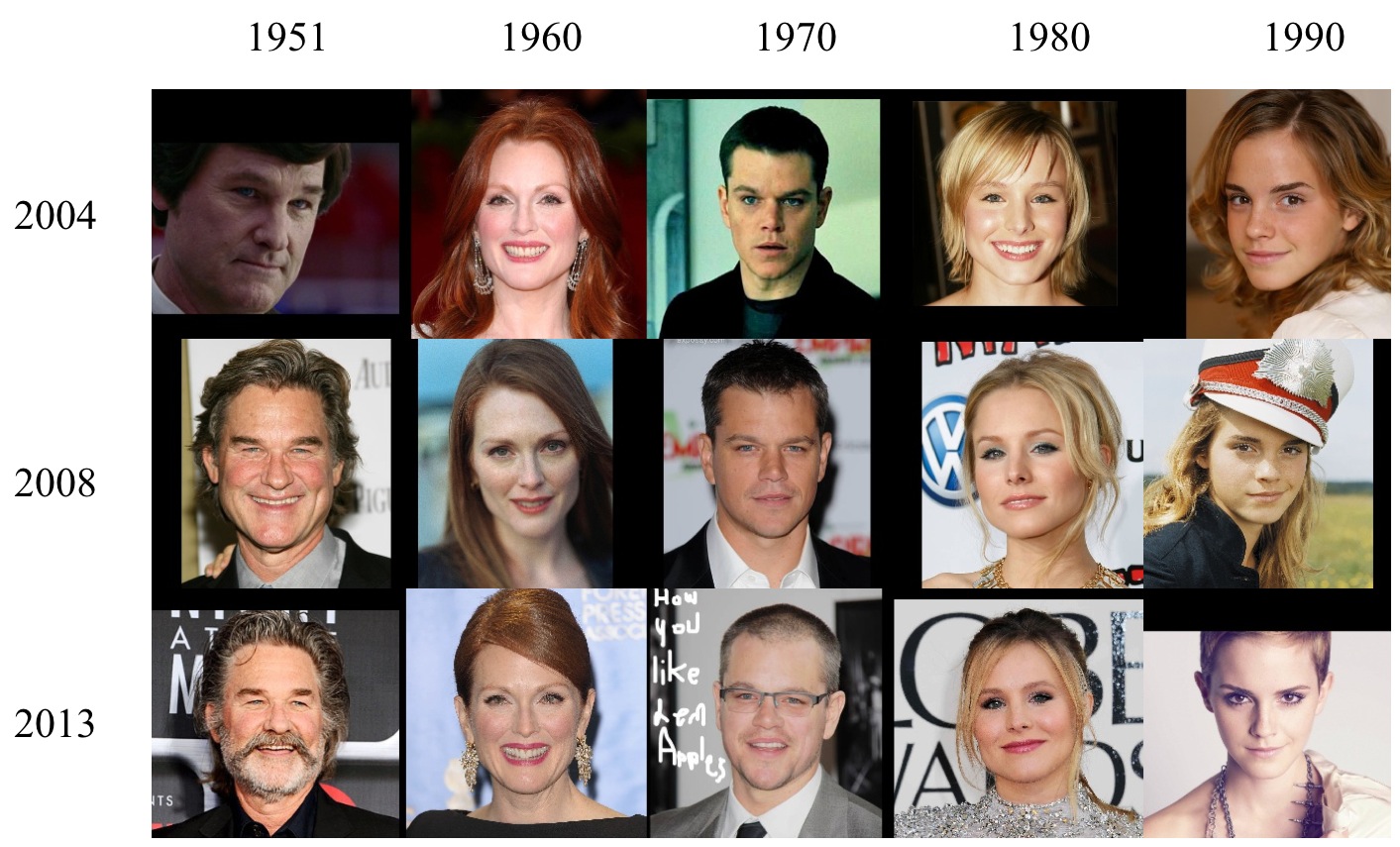

Cross-Age Celebrity Dataset (CACD)

The Cross-Age Celebrity Dataset (CACD) contains 163,446 images of 2,000 celebrities collected from the Internet. The images were collected from search engines using the celebrity name and a year (2004-2013) as keywords. We can therefore estimate the age of a celebrity in an image by simply subtracting the birth year from the year in which the photo was taken.
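
The collection and age-estimation steps described above can be illustrated with a minimal Python sketch. The names `Photo`, `estimate_age`, and `search_queries` are hypothetical and are not part of any released CACD tooling, and the exact query format used for collection is an assumption. Because only years are compared, an estimate of this kind can differ from the true age by up to one year.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Photo:
    """Hypothetical record for one collected image (not the CACD file format)."""
    celebrity: str
    birth_year: int
    year_taken: int


def estimate_age(photo: Photo) -> int:
    """Estimate age as the year the photo was taken minus the birth year."""
    return photo.year_taken - photo.birth_year


def search_queries(name: str, first_year: int = 2004, last_year: int = 2013) -> list[str]:
    """Build one "name year" keyword query per year (query format is an assumption)."""
    return [f"{name} {year}" for year in range(first_year, last_year + 1)]


if __name__ == "__main__":
    photo = Photo(celebrity="Example Person", birth_year=1980, year_taken=2010)
    print(estimate_age(photo))
    print(search_queries("Example Person")[:2])
```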