Enhancing Sparse Voice Annotation For Semantic Retrieval Of Personal Photos By Continuous Space Word Representations

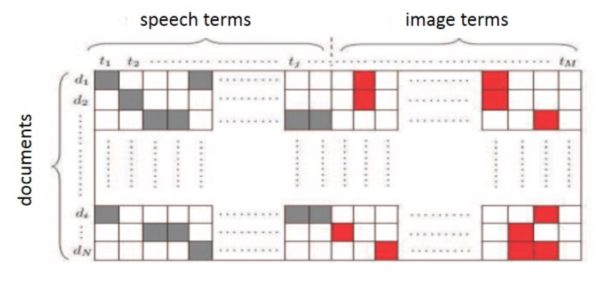

It is very attractive for the user to retrieve photos from a huge collection using high-level personal queries (e.g., uncle Bill’s house), but this is technically very challenging. Previous work proposed a set of approaches toward this goal, assuming that only 30% of the photos are annotated with sparse spoken descriptions recorded when the photos are taken. These approaches include fusing the sparse, spontaneously spoken features with the visual features of the photos by non-negative matrix factorization (NMF), and enhancing the results with a two-layer mutually reinforced random walk. However, because the speech annotation is very sparse, retrieval is very often dominated by the much more complete visual features. In this paper, we propose to use continuous space word representations to extend the sparse speech information and expand the photo representation, thereby enhancing the retrieval model. Very encouraging improvements were observed in preliminary experiments.
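To illustrate how continuous space word representations might extend a sparse spoken annotation, the following minimal sketch spreads the weight of each annotated word onto its nearest neighbours in embedding space, measured by cosine similarity. It is only an assumption-laden illustration, not the method evaluated in this paper: the function name `expand_annotation`, the parameters `top_k` and `min_sim`, and the three-dimensional toy vectors are all hypothetical and chosen for readability.

```python
import numpy as np


def expand_annotation(term_weights, embeddings, top_k=2, min_sim=0.5):
    """Add embedding-space neighbours of each annotated word to the annotation.

    term_weights: dict mapping annotated word -> weight (hypothetical format).
    embeddings:   dict mapping word -> vector (toy stand-in for trained vectors).
    """
    vocab = list(embeddings)
    mat = np.array([embeddings[w] for w in vocab], dtype=float)
    # Normalise rows so that a dot product equals cosine similarity.
    mat /= np.linalg.norm(mat, axis=1, keepdims=True)
    index = {w: i for i, w in enumerate(vocab)}

    expanded = dict(term_weights)
    for word, weight in term_weights.items():
        if word not in index:
            continue  # no vector available for this word, so nothing to expand
        sims = mat @ mat[index[word]]
        added = 0
        for j in np.argsort(-sims):  # most similar candidates first
            cand = vocab[j]
            if cand == word:
                continue
            if sims[j] < min_sim or added >= top_k:
                break
            # A neighbour inherits the source weight scaled by its similarity.
            scaled = weight * float(sims[j])
            expanded[cand] = max(expanded.get(cand, 0.0), scaled)
            added += 1
    return expanded


# Made-up vectors for illustration only; real vectors would come from a trained model.
toy_embeddings = {
    "house": [0.9, 0.1, 0.0],
    "home": [0.8, 0.2, 0.1],
    "beach": [0.0, 0.1, 0.9],
    "uncle": [0.1, 0.9, 0.1],
}
annotation = {"house": 1.0}
expanded = expand_annotation(annotation, toy_embeddings)
print(expanded)
```

In this toy setting, a photo annotated only with "house" also receives a slightly lower weight for "home", while unrelated words such as "beach" are left out by the similarity threshold. The denser term vector could then be fused with the visual features in place of the original sparse one.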