Discovering the City by Mining Diverse and Multimodal Data Streams

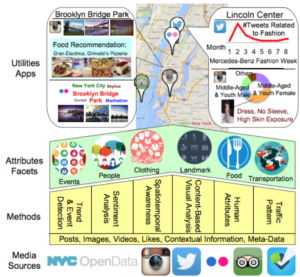

This work tackles the IBM grand challenge of seeing the daily life of New York City (NYC) from various perspectives by exploring rich and diverse social media content. Most existing works address this problem by relying on a single media source and cover only limited aspects of life. Because different social media are usually chosen for specific purposes, mining and integrating multiple social media is essential to understanding a city comprehensively. In this work, we first discover the similar and unique characteristics (e.g., attractions, topics) of different social media in terms of visual and semantic perception. For example, Instagram users share more food and travel photos, while Twitter users talk more about sports and news. Based on these characteristics, we analyze a broad spectrum of life aspects in NYC (trends, events, food, clothing, and transportation) by mining a large amount of diverse and freely available media (e.g., 1.6M Instagram photos, 5.3M Twitter posts). Because transportation logs are rarely available in social media, NYC Open Data (e.g., 6.5B subway station transactions) is leveraged to visualize temporal traffic patterns. Furthermore, the experiments demonstrate that our approaches can effectively provide an overview of urban life with considerable technical improvements, e.g., a 16% relative gain in food recognition accuracy from a hierarchical cross-media learning strategy, and a tenfold reduction in the feature dimensions for sentiment analysis without sacrificing precision.

The work was also awarded the ACM Multimedia 2014 Grand Challenge Multimodal Award.