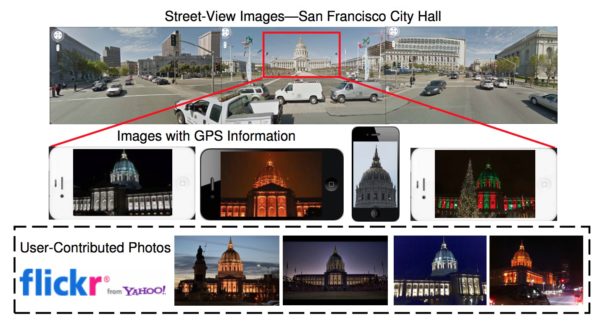

Augmenting mobile city-view image retrieval with context-rich user-contributed photos

With the growth of mobile devices, the demand for location-based services is rising. GPS information gives a rough estimate of a user's location, but extra information (e.g., photos) is needed to precisely locate the object of interest for further applications such as mobile search. A user can simply take a GPS-tagged picture of an interesting target to retrieve information about the building. Building a real-time building recognition or retrieval system is therefore a challenging problem. The most recent approaches recognize buildings using street-view images; however, query photos from mobile devices are usually taken under different lighting conditions. To provide a more robust city-view image retrieval system, we propose to augment the visual diversity of the database images by integrating context-rich user-contributed photos from social media. Preliminary experimental results show that street-view images provide different angles of the target, whereas user-contributed photos enhance the diversity of its appearance. In addition, we combine visual and GPS constraints in the retrieval process over an inverted index so that retrieval runs in real time.
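As a rough illustration of how visual and GPS constraints might be combined over an inverted index, the following is a minimal sketch and not the system described above. It assumes each image is already quantized into integer visual-word ids, scores candidates by the number of shared visual words, and applies the GPS constraint as a fixed search radius. The class `GeoInvertedIndex`, the helper `haversine_m`, the 200 m default radius, and the scoring rule are all hypothetical choices made for the example.

```python
import math
from collections import defaultdict


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    r = 6371000.0  # mean Earth radius in meters
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


class GeoInvertedIndex:
    """Hypothetical inverted index: visual word id -> ids of images containing it."""

    def __init__(self):
        self.postings = defaultdict(list)
        self.gps = {}  # image id -> (lat, lon)

    def add(self, image_id, visual_words, lat, lon):
        """Index one database image (street-view or user-contributed)."""
        self.gps[image_id] = (lat, lon)
        for word in set(visual_words):
            self.postings[word].append(image_id)

    def query(self, visual_words, lat, lon, radius_m=200.0, top_k=5):
        """Rank images within radius_m of the query by shared visual words."""
        scores = defaultdict(int)
        for word in set(visual_words):
            for image_id in self.postings.get(word, ()):
                ilat, ilon = self.gps[image_id]
                # GPS constraint (assumed form): skip images outside the radius.
                if haversine_m(lat, lon, ilat, ilon) <= radius_m:
                    scores[image_id] += 1
        # Highest score first; ties broken by image id for determinism.
        ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
        return ranked[:top_k]
```

In this sketch, street-view and user-contributed images go into the same index through `add`, and a query touches only the posting lists of its own visual words, which is what keeps the lookup cheap. A real system would likely weight words (for example with tf-idf) and use a spatial structure rather than a per-candidate distance check.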