Augmenting flower recognition by automatically expanding training data from web

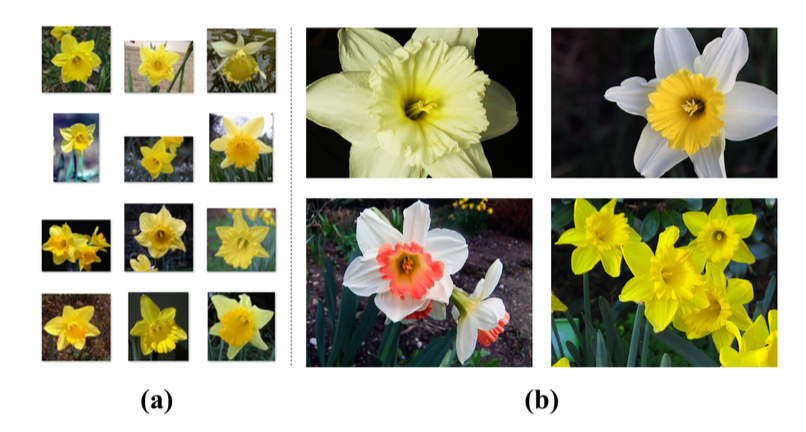

To improve the recognition rate, we propose a novel flower recognition system that automatically expands the training data from large-scale unlabeled image pools without human intervention. Existing flower recognition approaches typically learn classifiers from a small labeled dataset. However, it is difficult to build a model that generalizes (e.g., to real-world environments) from only a handful of labeled training examples, and manually annotating large-scale image collections is labor-intensive. To address these difficulties, our framework automatically expands the training data with visually diverse examples drawn from large-scale web images, requiring only minimal supervision. Inspired by co-training methods, we investigate two conceptually independent modalities (i.e., shape and color) that provide complementary information for learning our discriminative classifiers. Experimental results show that the augmented training set significantly improves recognition accuracy (from 65.8% to 75.4%) when starting from a very small initially labeled training set. We also conduct a set of sensitivity tests to analyze different learning strategies (i.e., co-training and self-training) and show that co-training is more efficient on our multi-view flower dataset.
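For readers unfamiliar with co-training, the sketch below shows the general idea in its textbook two-view form, not the authors' actual classifiers or selection criterion. It assumes a hypothetical nearest-centroid classifier per view, toy 2-D vectors standing in for the shape and color descriptors, and hypothetical class names ("rose", "sunflower"). In each round, the classifier trained on one view labels the unlabeled examples it is most confident about (largest gap between its nearest and second-nearest centroid), and those examples join the shared labeled set used by both views.

```python
import math

def centroids(X, y):
    """Mean feature vector per label (a hypothetical stand-in for a real classifier)."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for k, v in enumerate(x):
            acc[k] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(s / counts[label] for s in acc) for label, acc in sums.items()}

def predict_with_margin(cents, x):
    """Nearest-centroid label plus a confidence margin (2nd-nearest minus nearest distance)."""
    dists = sorted((math.dist(x, c), label) for label, c in cents.items())
    best_d, best_label = dists[0]
    margin = dists[1][0] - best_d if len(dists) > 1 else float("inf")
    return best_label, margin

def co_train(labeled, unlabeled, rounds=5, per_round=1):
    """labeled: [(shape_vec, color_vec, label)]; unlabeled: [(shape_vec, color_vec)]."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        for view in (0, 1):  # 0 = shape view, 1 = color view (assumed layout)
            if not pool:
                return labeled
            cents = centroids([ex[view] for ex in labeled], [ex[2] for ex in labeled])
            scored = []
            for i, ex in enumerate(pool):
                label, margin = predict_with_margin(cents, ex[view])
                scored.append((margin, i, label))
            scored.sort(reverse=True)  # most confident first
            chosen = scored[:per_round]
            # Labels from one view's classifier become training data for BOTH views.
            for _, i, label in chosen:
                labeled.append((pool[i][0], pool[i][1], label))
            taken = {i for _, i, _ in chosen}
            pool = [ex for j, ex in enumerate(pool) if j not in taken]
    return labeled

def predict(labeled, shape, color):
    """Combine both views by summing the distances to each class centroid."""
    shape_c = centroids([ex[0] for ex in labeled], [ex[2] for ex in labeled])
    color_c = centroids([ex[1] for ex in labeled], [ex[2] for ex in labeled])
    return min(shape_c, key=lambda lab: math.dist(shape, shape_c[lab])
               + math.dist(color, color_c[lab]))

# Toy data: one labeled seed per class, four unlabeled "web" examples.
labeled = [((0.0, 0.0), (1.0, 0.0), "rose"), ((5.0, 5.0), (0.0, 1.0), "sunflower")]
unlabeled = [((0.2, 0.1), (0.9, 0.1)), ((4.8, 5.1), (0.1, 0.9)),
             ((0.1, 0.3), (0.8, 0.2)), ((5.2, 4.9), (0.2, 0.8))]
expanded = co_train(labeled, unlabeled, rounds=2, per_round=1)
print(len(expanded), predict(expanded, (0.3, 0.2), (0.95, 0.05)))
```

A self-training baseline, by contrast, would use a single classifier (for example, on the concatenated shape and color vectors) that adds only its own most confident predictions; co-training instead lets each view supply examples the other view may be less certain about.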
