Anticipation of Human Actions With Pose-Based Fine-Grained Representations

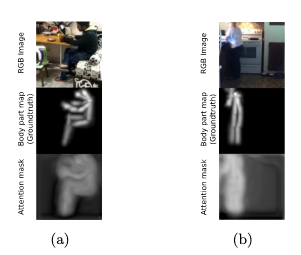

Anticipating an action that is about to happen allows us to interact with our environment more efficiently. However, prediction is a challenging task in computer vision, because only part of the video is available when a decision has to be made. A further complication is that it is not always clear which of the visible activities in the scene are relevant to the action and which are not. We suggest that the key to recognizing an action lies with the human actors, and that the prediction process should therefore attend to the persons in a scene. In our work, we extract fine-grained features on visible human actors and predict the future via an L2 regression in feature space. This allows the regressed future feature to focus on the actor. The future action is then classified from this regressed feature. More specifically, the fine-grained extraction is guided by a pose prediction system that models current and future human poses in the scene. We run qualitative and quantitative experiments on the Charades dataset, and initial results show that our system improves action prediction.
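
To make the regress-then-classify idea concrete, the following is a minimal sketch and not the model described in this work. It assumes that actor features are plain vectors, replaces the learned regressor with a closed-form ridge-regularized linear map, and uses random weights as a stand-in for a trained action classifier. All names (`fit_linear_regressor`, `classify`, `class_weights`), the feature dimension, and the toy data are hypothetical.

```python
import numpy as np


def l2_loss(predicted, target):
    """Mean squared L2 distance between predicted and target feature vectors."""
    diff = predicted - target
    return float(np.mean(np.sum(diff ** 2, axis=1)))


def fit_linear_regressor(current, future, ridge=1e-3):
    """Closed-form W minimizing ||current @ W - future||^2 + ridge * ||W||^2."""
    dim = current.shape[1]
    gram = current.T @ current + ridge * np.eye(dim)
    return np.linalg.solve(gram, current.T @ future)


def classify(features, class_weights):
    """Return the index of the highest-scoring action class for each row."""
    return np.argmax(features @ class_weights, axis=1)


# Toy data (assumption): 8-D actor features, 3 action classes.
rng = np.random.default_rng(0)
current_feats = rng.normal(size=(200, 8))   # features from observed frames
true_map = rng.normal(size=(8, 8))          # synthetic ground-truth dynamics
future_feats = current_feats @ true_map     # synthetic future features
class_weights = rng.normal(size=(8, 3))     # stand-in for a trained classifier

# Step 1: regress the future feature from the current one under an L2 objective.
W = fit_linear_regressor(current_feats, future_feats)
regressed = current_feats @ W
loss = l2_loss(regressed, future_feats)

# Step 2: classify the future action from the regressed feature.
predicted_actions = classify(regressed, class_weights)
reference_actions = classify(future_feats, class_weights)
agreement = float(np.mean(predicted_actions == reference_actions))
```

In this sketch the classifier never sees the true future feature at prediction time; it only sees the regressed one, which is the property the L2 objective is meant to support.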