A Unified Point-Based Framework for 3D Segmentation

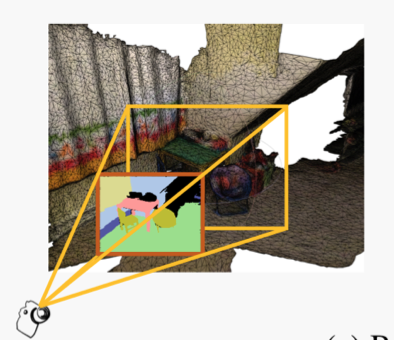

3D point cloud segmentation remains challenging for structureless and textureless regions. We present a new unified point-based framework for 3D point cloud segmentation that effectively optimizes pixel-level features, geometrical structures, and global context priors of an entire scene. By back-projecting 2D image features into 3D coordinates, our network learns 2D textural appearance and 3D structural features in a unified framework. In addition, we investigate a global context prior to obtain a better prediction. We evaluate our framework on the ScanNet online benchmark and show that our method outperforms several state-of-the-art approaches. We also explore synthesizing camera poses in 3D reconstructed scenes to achieve higher performance. In-depth analysis of feature combinations and synthetic camera poses verifies that features from different modalities benefit each other and that dense camera pose sampling further improves the segmentation results.
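The back-projection step mentioned above can be illustrated with a minimal sketch. The abstract does not specify the camera model or data layout, so everything here is an assumption made for illustration: a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a per-pixel depth map, a per-pixel 2D feature map, and a known 4x4 camera-to-world pose. The function name `back_project` is hypothetical and is not taken from the paper.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def back_project(
    depth: Sequence[Sequence[float]],
    features: Sequence[Sequence[Sequence[float]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    cam_to_world: Sequence[Sequence[float]],
) -> List[Tuple[Vec3, List[float]]]:
    """Lift per-pixel 2D features to 3D world points (assumed pinhole model).

    depth[v][u] is the depth at pixel column u, row v; features[v][u] is the
    2D feature vector at the same pixel; cam_to_world is a 4x4 pose matrix.
    """
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # skip pixels without a valid depth reading
            # Pinhole back-projection into camera coordinates (homogeneous).
            cam = ((u - cx) * d / fx, (v - cy) * d / fy, d, 1.0)
            # Apply the first three rows of the pose to get world coordinates.
            world = tuple(
                sum(cam_to_world[r][c] * cam[c] for c in range(4))
                for r in range(3)
            )
            points.append((world, list(features[v][u])))
    return points
```

Each returned pair holds a 3D world coordinate and the 2D feature vector carried over from its source pixel. In a pipeline of this kind, such pairs could then be associated with points of the reconstructed scene (for example by nearest-neighbor lookup) before being passed to a point-based network; that association step is likewise an assumption and is not shown here.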