Online Reranking via Ordinal Informative Concepts for Context Fusion in Concept Detection and Video Search

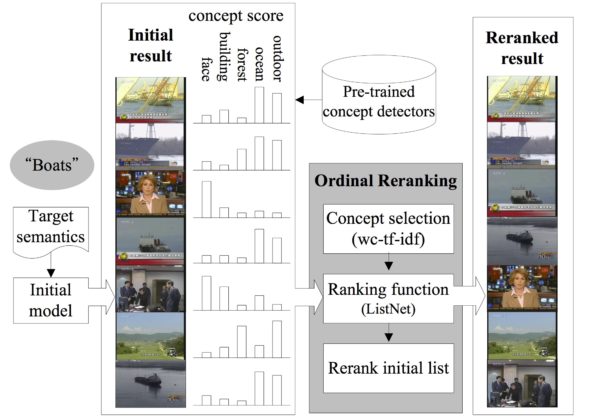

To exploit the co-occurrence patterns of semantic concepts while keeping the simplicity of context fusion, this paper proposes a novel reranking approach. The approach, called ordinal reranking, adjusts the ranking of an initial search (or detection) list based on concept co-occurrence patterns mined with ranking functions such as ListNet. Ranking functions are inherently more effective than classification-based reranking methods at mining ordinal relationships. In addition, ordinal reranking does not require ad hoc thresholding to derive noisy binary labels, and it needs no extra offline learning or training data. To select informative concepts for reranking, we also propose a new concept selection measure, wc-tf-idf, which considers the ordinal information underlying the ranking lists and is thus more effective than feature selection algorithms designed for classification. Because it is largely unsupervised, this reranking approach to context fusion applies equally well to concept detection and video search. While being extremely efficient, ordinal reranking outperforms existing methods by up to 40% in mean average precision (MAP) for the baseline text-based search and by 12% for the baseline concept detection on the TRECVID 2005 video search and concept detection benchmarks.
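The reranking idea can be illustrated with a minimal sketch. It is not the authors' implementation; several choices are assumptions made for illustration. The initial list's own scores serve as the ListNet target (so no extra training data is used). Each shot is described by a vector of detector scores for concepts assumed to be already selected, since wc-tf-idf is not defined here and is not shown. A linear scorer is fit with the standard ListNet top-one cross-entropy. The learned context score is then fused with the initial score through a hypothetical weight `alpha` after min-max normalization. The function names `train_listnet`, `ordinal_rerank`, and `min_max` are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """ListNet top-one probabilities: a numerically stable softmax over one list."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_listnet(features, targets, lr=0.1, epochs=200):
    """Fit a linear scorer f(x) = w . x on a single ranked list by gradient
    descent on the ListNet top-one cross-entropy between softmax(targets)
    and softmax(Xw)."""
    dim = len(features[0])
    w = [0.0] * dim
    p_target = softmax(targets)
    for _ in range(epochs):
        p_model = softmax([dot(w, x) for x in features])
        # d(loss)/dw = sum_i (P_model(i) - P_target(i)) * x_i
        grad = [0.0] * dim
        for x, pm, pt in zip(features, p_model, p_target):
            for k in range(dim):
                grad[k] += (pm - pt) * x[k]
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
    return w


def min_max(values):
    """Rescale scores to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def ordinal_rerank(initial_scores, concept_scores, alpha=0.5, lr=0.1, epochs=200):
    """Hypothetical sketch: learn a context model from the initial list itself
    (assumption: initial scores act as pseudo ground truth), then fuse it with
    the initial scores. Returns shot indices in reranked order, best first."""
    w = train_listnet(concept_scores, initial_scores, lr, epochs)
    context = min_max([dot(w, x) for x in concept_scores])
    initial = min_max(initial_scores)
    fused = [alpha * a + (1 - alpha) * c for a, c in zip(initial, context)]
    return sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)
```

For example, `ordinal_rerank([3.0, 2.5, 1.0, 0.5], [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3], [0.1, 0.9]])` returns a permutation of the four shot indices. Setting `alpha=1.0` reproduces the initial ranking, and smaller values give the learned concept context more influence. Because the model is fit per query on that query's own list, this sketch is consistent with the "online", training-free framing above, though the paper's actual features, targets, and fusion rule may differ.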