Learning by Expansion: Exploiting Social Media for Image Classification with Few Training Examples

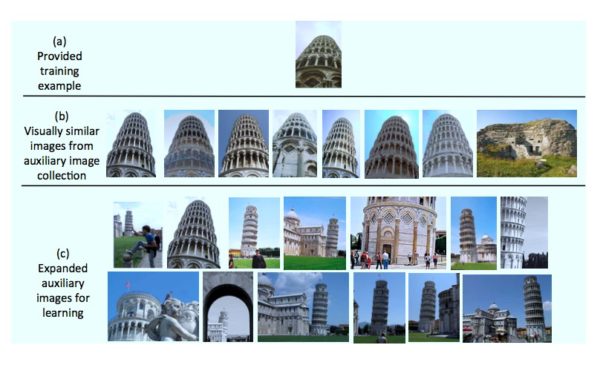

Given the sheer volume of user-contributed photos and videos, we propose leveraging such freely available image collections as training images for image classification. We present an image expansion framework that, starting from very few training examples, mines additional semantically related training images from an auxiliary image collection. The expansion relies on a semantic graph that combines visual and (noisy) textual similarities within the auxiliary collection, and we address the scalability of constructing this graph (e.g., with MapReduce). We find that the expanded images not only reduce time-consuming manual annotation but also improve classification accuracy, because they add more visually diverse training images. Experiments on benchmark datasets show that the expanded training images improve image classification significantly. Furthermore, by exploiting media sharing services (such as Flickr) for additional training images, we achieve more than 27% relative improvement in accuracy over state-of-the-art approaches that crowdsource training images.
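To make the idea of graph-based expansion concrete, here is a minimal in-memory sketch. It is not the authors' method: it assumes cosine similarity on visual feature vectors, Jaccard similarity on tag sets, a hypothetical linear fusion weight `alpha`, a hypothetical edge `threshold`, and expansion by collecting graph neighbors of the seed (labeled) images within a fixed number of hops. The function names `build_semantic_graph` and `expand` are illustrative only. The pairwise computation is quadratic in the number of images, which is exactly the cost that motivates a distributed construction such as MapReduce at Flickr scale.

```python
import numpy as np


def cosine_sim(features):
    """Pairwise cosine similarity between row vectors (visual features)."""
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)
    return unit @ unit.T


def jaccard_sim(tag_sets):
    """Pairwise Jaccard similarity between (noisy) user tag sets."""
    n = len(tag_sets)
    sim = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            union = tag_sets[i] | tag_sets[j]
            if union:
                sim[i, j] = len(tag_sets[i] & tag_sets[j]) / len(union)
    return sim


def build_semantic_graph(features, tag_sets, alpha=0.5, threshold=0.5):
    """Assumed fusion: weighted sum of visual and textual similarity.

    An edge links two images when their fused score reaches `threshold`.
    Returns a boolean adjacency matrix without self-loops.
    """
    fused = alpha * cosine_sim(features) + (1 - alpha) * jaccard_sim(tag_sets)
    adj = fused >= threshold
    np.fill_diagonal(adj, False)
    return adj


def expand(adj, seeds, hops=1):
    """Return non-seed images reachable from the seeds within `hops` edges."""
    selected = set(seeds)
    frontier = set(seeds)
    for _ in range(hops):
        reached = set()
        for i in frontier:
            reached.update(int(j) for j in np.flatnonzero(adj[i]))
        frontier = reached - selected
        selected |= frontier
    return sorted(selected - set(seeds))


# Toy auxiliary collection: two "sea" images and two "city" images.
features = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
tags = [{"beach", "sea"}, {"sea", "sand"}, {"city"}, {"city", "night"}]

graph = build_semantic_graph(features, tags)
print(expand(graph, seeds=[0]))  # -> [1]
```

With a single labeled "sea" image as the seed, the sketch pulls in the other visually and textually similar "sea" image while leaving the "city" images out. Combining both similarity signals is the point: neither noisy tags alone nor visual features alone need to be decisive.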